Markov Chain Calculator

A calculator for Markov chain computations, supporting matrices with up to 10 columns.

Markov Model An Overview Sciencedirect Topics

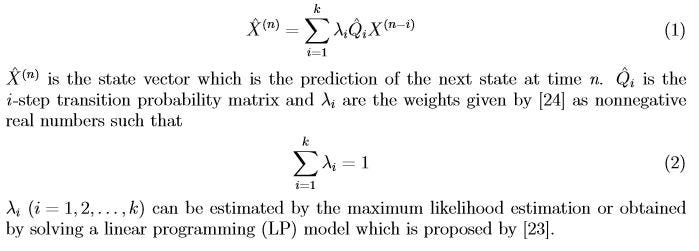

Assume $X = (X_n)_{n \ge 0}$ is a Markov chain and let $(n_k)_{k \ge 0}$ be …

A Markov chain has no memory: the joint distribution of how many individuals will be in each allowed state depends only on how many were in each state the moment before, not on the earlier history. In this example, we model a very simple Markov chain of a patient who transitions from a Well state to a Post-Stroke state and a Dead state. By FUKUDA Hiroshi, 2004.10.12. Input: the probability matrix $P$, where $P_{ij}$ is the transition probability from state $i$ to state $j$.
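Below is a minimal sketch of the Well / Post-Stroke / Dead cohort model. The transition probabilities are hypothetical placeholders rather than clinical estimates, and the names `STATES`, `step` and `run` are illustrative. Each cycle's counts are computed only from the previous cycle's counts, which is the no-memory property described above.

```python
# Hypothetical three-state cohort model: Well -> Post-Stroke -> Dead.
# The probabilities are illustrative placeholders, not clinical estimates.
STATES = ["Well", "Post-Stroke", "Dead"]
P = [
    [0.85, 0.10, 0.05],  # from Well
    [0.00, 0.75, 0.25],  # from Post-Stroke (no recovery assumed)
    [0.00, 0.00, 1.00],  # from Dead: absorbing, it can never be left
]


def step(cohort, P):
    """Advance one cycle: next[j] = sum_i cohort[i] * P[i][j].
    Only the current counts are used (the chain has no memory)."""
    n = len(P)
    return [sum(cohort[i] * P[i][j] for i in range(n)) for j in range(n)]


def run(cohort, P, cycles):
    """Return the cohort counts for cycles 0..cycles."""
    history = [list(cohort)]
    for _ in range(cycles):
        cohort = step(cohort, P)
        history.append(cohort)
    return history


if __name__ == "__main__":
    for t, counts in enumerate(run([1000.0, 0.0, 0.0], P, 3)):
        print(t, dict(zip(STATES, (round(c, 1) for c in counts))))
```

Starting with 1000 patients in Well, cycle 1 gives 850 / 100 / 50, and the total stays 1000 because every row of `P` sums to 1.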

For larger matrices, use …

Problems and Tentative Solutions

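An absorbing Markov chain is a Markov chain in which it is impossible to leave some states (the absorbing states), and any state can reach such a state with positive probability after some number of steps. The state vector after $n$ steps is $S_n = S_0 P^n$.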
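For an absorbing chain, you can find the expected number of steps spent in transient states before absorption by solving $(I - Q)\,t = \mathbf{1}$. Here $Q$ is the transient-to-transient block of $P$, so $t = N\mathbf{1}$ with fundamental matrix $N = (I - Q)^{-1}$. The sketch below makes two assumptions: absorbing states are exactly those with $P_{ii} = 1$, and every transient state can reach one. It uses exact `Fraction` arithmetic, and the function names are hypothetical.

```python
from fractions import Fraction


def absorbing_states(P):
    """Assumption: a state is absorbing exactly when P[i][i] == 1."""
    return [i for i in range(len(P)) if P[i][i] == 1]


def solve(A, b):
    """Solve A x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(n):
            if r != col:
                factor = M[r][col] / M[col][col]
                M[r] = [a - factor * c for a, c in zip(M[r], M[col])]
    return [M[i][n] / M[i][i] for i in range(n)]


def expected_steps_to_absorption(P):
    """Expected number of steps before absorption from each transient state:
    t = (I - Q)^{-1} 1, where Q is the transient-to-transient block of P.
    Assumes every transient state can reach an absorbing state; otherwise
    I - Q is singular and no unique solution exists."""
    absorbing = set(absorbing_states(P))
    transient = [i for i in range(len(P)) if i not in absorbing]
    A = [[(1 if i == j else 0) - P[i][j] for j in transient] for i in transient]
    return dict(zip(transient, solve(A, [1] * len(transient))))


if __name__ == "__main__":
    F = Fraction
    # Hypothetical Well / Post-Stroke / Dead matrix with exact fractions.
    P = [[F(17, 20), F(1, 10), F(1, 20)],
         [F(0), F(3, 4), F(1, 4)],
         [F(0), F(0), F(1)]]
    print(expected_steps_to_absorption(P))
```

With these illustrative numbers, the expected time to absorption is 28/3 steps from state 0 and 4 steps from state 1.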

Markov chains are a series of transitions in a finite state space in discrete time, where the probability of each transition depends only on the current state. This site is a part of the JavaScript E-labs learning objects for decision making.
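Here is a sketch of sampling one path from a chain. It assumes states are numbered `0 … n-1` and that `P` is a list of rows. The helper name `simulate` is hypothetical; it relies on the standard-library `random.choices`.

```python
import random


def simulate(P, start, steps, rng=None):
    """Sample a path X_0, ..., X_steps from transition matrix P.
    The next state is drawn from the row of the current state only,
    which is exactly the Markov property."""
    if rng is None:
        rng = random.Random()
    path = [start]
    for _ in range(steps):
        row = P[path[-1]]
        path.append(rng.choices(range(len(row)), weights=row, k=1)[0])
    return path


if __name__ == "__main__":
    P = [[0.6, 0.4], [0.3, 0.7]]  # illustrative two-state matrix
    print(simulate(P, start=0, steps=10, rng=random.Random(42)))
```

Passing a seeded `random.Random` makes a run reproducible.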

The system is memoryless. Output: the probability vector in the stable state.
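The stable-state probability vector $\pi$ satisfies $\pi = \pi P$. One simple way to approximate it is power iteration, sketched below under the assumption that the chain is irreducible and aperiodic. The function name and the matrix values are illustrative.

```python
def stationary(P, tol=1e-12, max_iter=10_000):
    """Approximate the stable-state vector pi (pi = pi P) by power iteration.
    Assumes an irreducible, aperiodic chain so the iteration converges."""
    n = len(P)
    pi = [1.0 / n] * n  # start from the uniform distribution
    for _ in range(max_iter):
        nxt = [sum(pi[i] * P[i][j] for i in range(n)) for j in range(n)]
        if max(abs(a - b) for a, b in zip(nxt, pi)) < tol:
            return nxt
        pi = nxt
    raise RuntimeError("power iteration did not converge")


if __name__ == "__main__":
    P = [[0.6, 0.4], [0.3, 0.7]]  # illustrative values
    print(stationary(P))  # close to [3/7, 4/7]
```

For this matrix, you can check by hand that $\pi = (3/7, 4/7)$: $\tfrac{3}{7}\cdot 0.6 + \tfrac{4}{7}\cdot 0.3 = \tfrac{3}{7}$.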

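Sequence models: the probability $P(x)$ of a sequence $x$ is the joint probability of each base, $P(x) = P(x_k, x_{k-1}, \dots, x_1)$. Estimating $P(x)$: under the Markov assumption, this factorizes as $P(x) = P(x_1)\prod_{i=2}^{k} P(x_i \mid x_{i-1})$.

Matrix Multiplication and Markov Chain Calculator-II: a Markov chain is a sequence of time-discrete transitions under the Markov property with a finite state space.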
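The following sketch estimates transition probabilities from counts: occurrences of each pair divided by the total occurrences of pairs starting with the same symbol. It then scores a sequence with a first-order Markov model. The training sequences, the uniform initial distribution and the function names are hypothetical. For long sequences, summing log-probabilities avoids floating-point underflow.

```python
from collections import Counter, defaultdict


def estimate_transitions(training):
    """Maximum-likelihood estimate from counts:
    P(t | s) = occurrences of pair (s, t) / total occurrences of pairs starting with s."""
    counts = defaultdict(Counter)
    for seq in training:
        for s, t in zip(seq, seq[1:]):
            counts[s][t] += 1
    return {s: {t: c / sum(row.values()) for t, c in row.items()}
            for s, row in counts.items()}


def sequence_probability(x, initial, trans):
    """P(x) = P(x_1) * prod_{i>=2} P(x_i | x_{i-1}) for a first-order Markov model.
    Unseen transitions get probability 0 (no smoothing)."""
    p = initial[x[0]]
    for prev, cur in zip(x, x[1:]):
        p *= trans.get(prev, {}).get(cur, 0.0)
    return p


if __name__ == "__main__":
    trans = estimate_transitions(["ACGT", "AACG"])  # hypothetical training data
    uniform = {base: 0.25 for base in "ACGT"}      # assumed initial distribution
    print(sequence_probability("ACG", uniform, trans))
```
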

Enter the transition matrix and the initial state vector. The formula is in matrix form, $S_n = S_0 P^n$, where $S_0$ is a vector and $P$ is a matrix. Calculator for finite Markov chains.
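Here is a sketch of the core computation $S_n = S_0 P^n$. It raises $P$ to the $n$-th power by repeated squaring. The function names are hypothetical and the matrix values are illustrative.

```python
def mat_mul(A, B):
    """Product of two square matrices stored as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def mat_pow(P, n):
    """P^n by repeated squaring: O(log n) matrix products instead of n."""
    size = len(P)
    result = [[1.0 if i == j else 0.0 for j in range(size)] for i in range(size)]
    base = P
    while n > 0:
        if n & 1:
            result = mat_mul(result, base)
        base = mat_mul(base, base)
        n >>= 1
    return result


def state_after(S0, P, n):
    """S_n = S_0 P^n: the initial row vector times the n-th power of P."""
    Pn = mat_pow(P, n)
    return [sum(S0[i] * Pn[i][j] for i in range(len(S0))) for j in range(len(Pn))]


if __name__ == "__main__":
    P = [[0.6, 0.4], [0.3, 0.7]]  # illustrative values
    print(state_after([1.0, 0.0], P, 2))  # approximately [0.48, 0.52]
```

For a single $n$, you could also multiply the vector by $P$ $n$ times. Computing $P^n$ explicitly is useful when you want the $n$-step transition matrix itself.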

Which five concepts does the Markov chain calculator cover? $P$, the transition matrix, contains the probabilities of moving from one state to another. A concise way of …

$S_0$: the initial state vector. Exponent $n$: the power to which a number (here, the matrix $P$) is raised.

In this article, we discuss the Chapman-Kolmogorov equations. Exercise 221 (Subchain from a Markov chain): assume $X = (X_n)_{n \ge 0}$ …

A common type of Markov chain with transient states is an absorbing one. Formula: a fact or a rule written with mathematical symbols. General Markov chains: for a general Markov chain with states $0, 1, \dots, M$, the $n$-step transition from $i$ to $j$ means the process goes from $i$ to $j$ in $n$ time steps. Let $m$ be a non-negative integer.
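The $n$-step transition probabilities satisfy the Chapman-Kolmogorov equations. Assume a time-homogeneous chain. Condition on the state $k$ occupied at time $n$, then apply the Markov property, which drops $X_0 = i$ from the second factor:

```latex
\begin{aligned}
p_{ij}^{(n+m)} &= \Pr(X_{n+m} = j \mid X_0 = i) \\
  &= \sum_{k=0}^{M} \Pr(X_n = k \mid X_0 = i)\,\Pr(X_{n+m} = j \mid X_n = k,\, X_0 = i) \\
  &= \sum_{k=0}^{M} p_{ik}^{(n)}\, p_{kj}^{(m)}
\end{aligned}
```

In matrix form this reads $P^{(n+m)} = P^{(n)} P^{(m)}$. By induction, $P^{(n)} = P^n$, which is why the calculator raises $P$ to the $n$-th power.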

Calculator for matrices with up to 10 rows. Example matrix:

$$P = \begin{pmatrix} 0.6 & 0.4 \\ 0.3 & 0.7 \end{pmatrix}$$

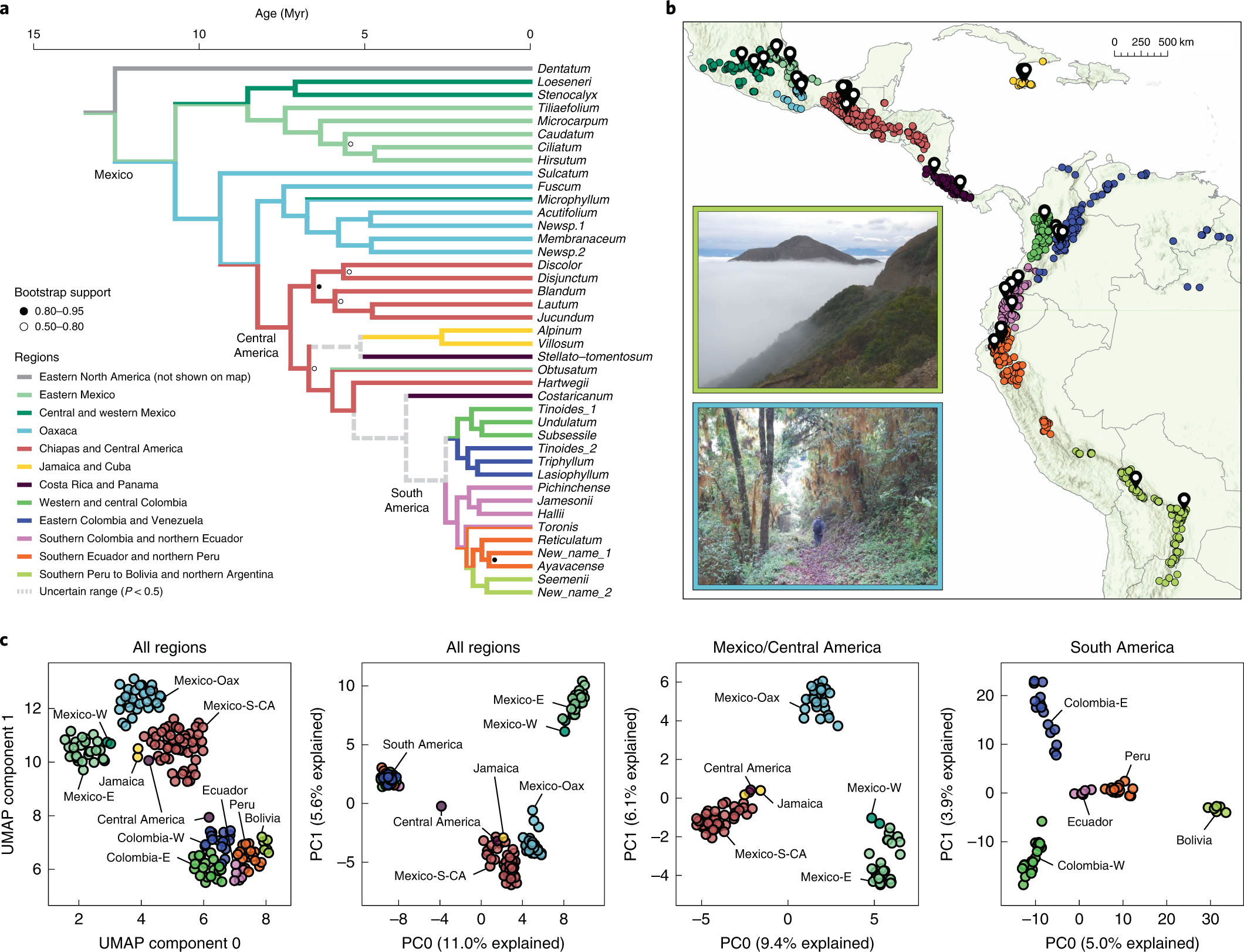

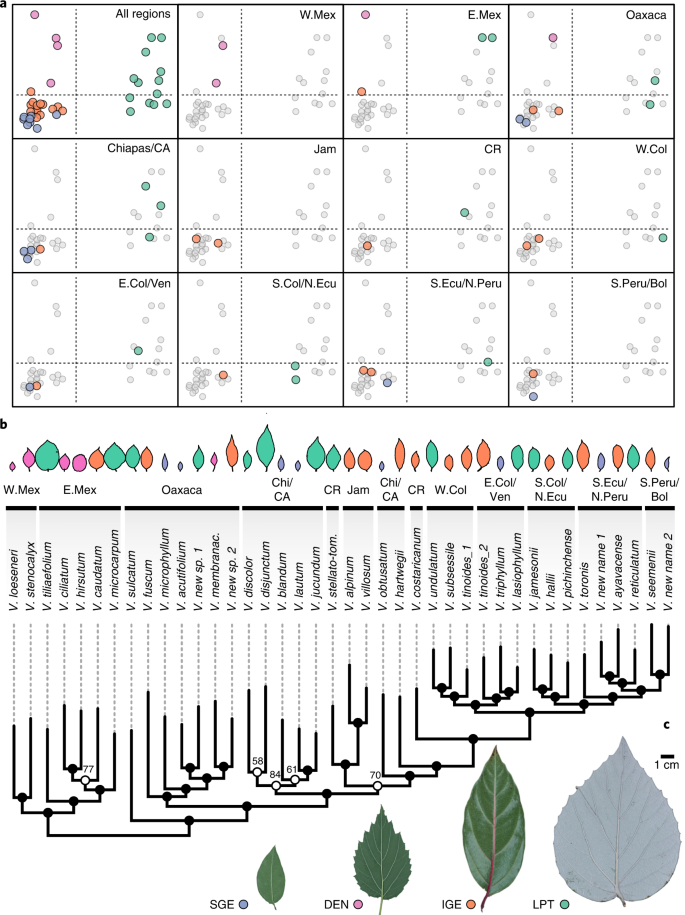

Replicated Radiation Of A Plant Clade Along A Cloud Forest Archipelago Nature Ecology Evolution

Ca Markov Approach In Dynamic Modelling Of Lulcc Using Esa Cci Products Over Zambia Intechopen

Markov Chain Attribution Modeling Complete Guide Adequate

Simchi Levi Zhao 04 Pdf Markov Chain Supply Chain

Markov Chain Matrix

Transition Probability An Overview Sciencedirect Topics

Replicated Radiation Of A Plant Clade Along A Cloud Forest Archipelago Nature Ecology Evolution

Markov Chain Calculator Model And Calculate Markov Chain Easily Using The Wizard Based Software Youtube

Intermittent Demand Forecasting Using Markov Chains By Aman Sahotra Analytics Vidhya Medium

Book Pdf Pdf Markov Chain Theoretical Computer Science

Ca Markov Approach In Dynamic Modelling Of Lulcc Using Esa Cci Products Over Zambia Intechopen

Markov Model To Compute The Probability On The N Th Day Mathematics Stack Exchange

Much More About Markov Chains Ppt Download

How To Build A Market Simulator Using Markov Chains And Python By Bassim Eledath Towards Data Science

Getting Started With Markov Chains Part 2 Revolutions

Markov Chain Calculator Model And Calculate Markov Chain Easily Using The Wizard Based Software Youtube

Github Miguelmota Markov Chain Calculate The Probability Of The Next Transition State Using Markov Chains